TLDR

The parser can be found at https://parser.ksteimel.duckdns.org.

What's with the name?

Mti ([m̩ti]) is the Swahili word for tree. This parser generates trees so it seemed like a nice name.

Motivation

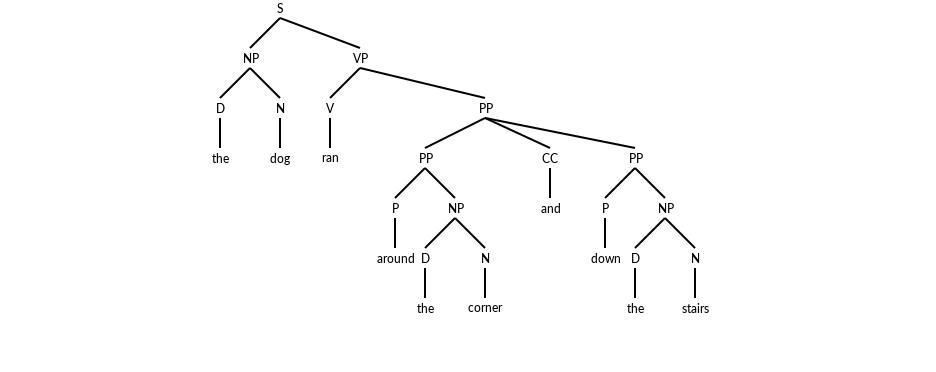

My department in Linguistics had previously used an online parser to illustrate the generative principle. This principle is fundamental to generative grammar. Essentially, the sentences produced by a grammar should be all the sentences in a language and only those sentences. By showing the sentences that would be generated by a grammar that are ungrammatical and the sentences you want to be able to process but are unable to, you are able to tune your grammar to conform to the generative principle.

However, the parser that they had used was not libre software and when the owner was not interested in maintaining it any longer, they lost their teaching tool.

They asked me if I could make a new implementation. I was excited to try because I had wanted to make a context free parser from scratch in Julia. This was the perfect thing to hold my feet to the fire.

Implementation

Mti uses the Earley algorithm to do parsing of Context Free Grammars and the Genie framework for handling web requests.

Parser

The parser used is a version of the Earley algorithm that I wrote in Julia. The code can be found on my personal gitea server.

For an explanation of how this algorithm works, I encourage you to look at the pseudocode section in the above wikipedia link.

There are some areas that can be improved upon in the parser implementation. At the moment, optionality is handled on the rule generation side. Syntactic elements surrounded by parenthesis are optional.

It's simple to see how you would handle optionality with one optional element. For example, if you had the following rule:

NP -> D (Adj) N

Then you would generate one rule where the optional component is there (e.g. NP -> D Adj N) and one rule where the optional component is missing (e.g. NP -> D N).

However, this becomes a problem when you have multiple optional pieces in a sentence. All possible permutations of optional elements have to be considered. For example, if we have this rule:

NP -> D (Adj) N (PP)

then there are four possibilites:

- NP -> D N

- NP -> D Adj N

- NP -> D N PP

- NP -> D Adj N PP

You'll notice that this means each sentence with optional elemnts has n^2 introduced sentences, where n is the number of optional elements added in.

To handle this, a bitmask over optional elements is constructed. If we had three optional elements then we would count to 3^2 (16) in binary and each of these would be a mask over the optional elements.

If the mask is 1, then that optional element is included. Once the optional elements that should be included are calculated, the non-optional elements are woven in.

NP -> (D) (Adj) N (PP)

Optional elements: D Adj PP

| bitmask | introduced rule |

|---|---|

| 000 | N |

| 001 | N PP |

| 010 | Adj N |

| 011 | Adj N PP |

| 100 | D N |

| 101 | D N PP |

| 110 | D Adj N |

| 111 | D Adj N PP |

Why is this problematic?

The issue with this is that it has limitations when moving outside of optionality. For example, if we wanted to represent repetition, the method to do so is unsatisfying. Sure we could easily add some arbitrary number of rules for each repeated element. For example, if we had NP -> Adj* N, we could add a rule for NP -> Adj N, NP -> Adj Adj N, NP Adj Adj Adj N adn so on.

We would have to establish some arbitrary cutoff where we stop doing this though. Technically repetition is supposed to be possible forever (if you believe in infinite strings). However, we have to set some stopping point where we don't continue adding rules, otherwise we would never get to the actual parsing :)

The solution I want to move to going forward is to have the syntactic elements stored in the Earley graph consist of julia types with information about the optionality or repeatability of that element (in addition to feature information for the future implementation of feature grammars). This would move dealing with optionality and repetition into the earley algorithm itself. Instead of generating more rules, the algorithm would simply have the option of ignoring the next syntacitc element (for optionality) or duplicating the current syntactic element (for repetition).

This has mostly been a discussion of the parser used in the web app, the pitfalls of the current implmentation and the directions I want to go instead.

In a future post I will explain how the web framework works and how to install this webapp on your own server

Comments

January 16, 2021 04:23

More to come about this!